As discussed in Part 1 of this AI discussion, China’s central government plans to achieve AI breakthroughs by 2025 and world AI dominance by 2030. If the DoD’s past acquisition track record doesn’t change, it could be twenty years before significant AI technology is actually deployed to military units. But a more important question is how AI technology can provide significant value to the military. It is always easy to assume a new, super-hyped technology will add significant value to military warfare, but over time the more important reality becomes how best to make use of the technology for current or new deployment strategies.

Because the current state of AI relies primarily on convolutional neural networks, which depend heavily on large amounts of use-case-specific training data, the results are often brittle and produce unintended errors. Depending upon how such errors are detected and handled, current AI systems can create catastrophic failures. In March of last year a second Tesla driver was killed when the car drove under a crossing truck, because the autopilot was fooled by early morning sunlight on the truck trailer. While traffic deaths like these represent critical AI failures, one can imagine much worse scenarios if AI is allowed to control military force deployments and/or weapon delivery decisions, where almost every situation is significantly different!

Another way to understand AI is to change the way we think about it by being more specific about the names or labels we use to describe the technology. Augmented Intelligence or Intelligence Augmentation (IA) are terms being used to more accurately describe the way we use AI to support or enhance our human intelligence. IA reverses the letters to prevent confusion with the more general AI acronym. Augmented Reality (AR) can be an example of IA and may become the most significant realization of applied IA over the next decade.

Most of us consider our smartphones to be memory upgrades, as they guide us through our daily calendar, contacts, messages, navigation, and internet searches throughout the day. IKEA Place is a new smartphone or tablet application using AR to show a potential buyer how a new piece of furniture will look and fit in an intended room at home. How many of us have realized too late that a purchased piece was too large or too small for the intended room? IKEA combines AI and AR to deliver to customers this powerful improvement to the simple use case of furniture shopping. For that they are predicting significant revenue increases to their bottom line.

Another common successful AI use case is Natural Language Processing (NLP), where research dates back to the 1950s. Thanks to that research, much of it from DARPA, this is an AI area that is commonly deployed to help us control functions in our cars, homes, and smartphones. As mentioned in Part 1 on AI, NLP is close to delivering universal language translation. One can only guess at the amazing impact NLP will have on global education and learning, as knowledge in any language becomes available to anyone interested.

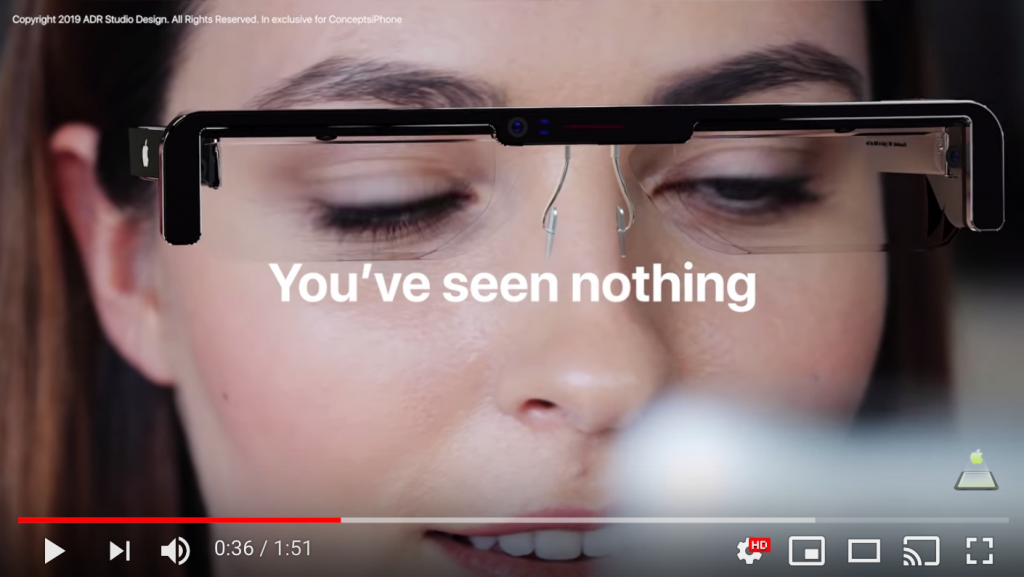

Virtual Reality (VR), unlike AR, is an immersive experience because the wearer sees only what the headset screen delivers. VR and associated headset products are a big commercial market for gaming and some other use cases, like previewing travel destinations. Alternatively, the new commercial hope for IT devices is AR glasses, also called smart glasses. Some will remember that Google introduced Google Glass in 2013 to open this new market. Because of limited uses and limited computing power, the glasses failed to gain a following and were pulled from the market in 2015. Google then introduced Google Glass Enterprise in 2017 as industrial-only glasses, designed to augment complex manufacturing, assembly-line, and other industrial AR use cases.

Today, smaller high-power electronics appear to make smart glasses a near-term reality. Giants Apple and Intel are set to release smart glasses products sometime in 2020. Intel has uncharacteristically decided to enter this commercial market, estimating that it is better able to optimize the micro-size electronics required to make the glasses look normal. Intel claims its glasses safely deliver the AR image directly onto the user’s retina. Other companies are working on AR contact lenses, which will eventually put all of us into sci-fi movie territory!

Like the heads-up AR display in F-35 pilot helmets, one can imagine wearing smart glasses to navigate our daily lives, providing augmented information to help us choose among nearby restaurants, find office locations after parking our car, or let us know if our health parameters are good or bad at any instant in time. The good news is that IA smart glasses leave the user in charge of their actions and could even help prevent accidents. For the Department of Defense, the challenge continues to be identifying which early use cases for AI or IA technologies will best serve our country’s national security.

One answer might be the current research work on Explainable AI, which is an important effort to allow users to understand how AI algorithms or AI models arrive at decisions and/or recommendations. Current convolutional neural network AI/ML algorithms are effectively black boxes that take in inputs and deliver decision outputs without access to the reasoning inside. In warfare, however, even if command and control (C2) systems are using AI to augment decision options for positioning forces or authorizing the use of weapons, commanders are held accountable for their actions and the results, and must be able to justify how they arrived at each decision.

One small startup company, Z Advanced Computing, Inc (ZAC), has been working to develop a form of explainable AI to prevent brittle AI/ML false alarms and reduce the size of training data sets. ZAC is applying explainable AI both to the commercial market, for searching visual images like shoes or clothing, and, more recently, to supporting the U.S. Air Force in automating the extraction of military information from video and visual images. ZAC claims its technology mimics how humans discover, recognize, and learn reusable, modular, cumulative, and scalable knowledge.

Lastly, consider the long-standing holy grail of AI’s potential future, Artificial General Intelligence (AGI). AGI is the idea of machines that think and reason with human-like results. Current estimates place effective AGI availability somewhere between 20 and 200 years from now, meaning that solid estimates are not available. One could imagine a working AGI system to be like the HAL-9000 computer from the famous 1968 movie, 2001: A Space Odyssey. In the movie HAL, the heart of the spaceship’s control system, kills the ship’s crew and then tries to kill the flight commander, all because HAL had been ordered to tell a lie, an order that broke HAL’s prime directive. Emotional images, like those portrayed in this movie, partly account for our natural fear of AI. Elon Musk has called AI humanity’s “biggest existential threat.” He and others rationally recommend that our government spend a couple of years better understanding AI before it becomes deployed in ways that could make dangerous societal mistakes.

Until explainable AI or AGI technologies become more widely available, current IA is being explored to help augment C2 warfare functions, such as language translation using NLP, and AI/ML data tracking to improve situation assessment. Done properly, these functions can augment C2 support to the warfare commanders without relieving them of their responsibility to apply experience and knowledge to make good decisions.

…But it could turn out that F-35-like AR and smart glasses will become the more practical military AI capability in the foreseeable future…

Thank you Marv. Extremely interesting and finely written.

IA. Acronym collision. Good piece, Marv, keep them coming and see you soon.

Hi Marv

Great article. I’d like to add two points for your consideration:

1/ Ban AI & Machine-based Control Of All Mission Critical Systems

Your article points to the Tesla accident as an example of AI going wrong; however, I think a bigger danger is any type of machine-based control. The current 737 Max grounding and recent USS John McCain accident didn’t involve AI but had systems that humans could not control. The DoD must set new design requirements for all AI systems to mandate manual override functionality. Moreover, Congress should ban AI-based killing for all new weapons systems. If a trigger has to be pulled, it must be by a person in uniform.

2/ Proactively Engage Inventors & Young Entrepreneurs

Your article points to lots of new AI/IA/AR tech that the US Navy should evaluate. I bet if someone did an analysis of OTA grants they’d find the bulk of the money going to either former DoD personnel or existing DoD vendors and not new people with “outside the box” ideas. The US Navy needs a new process designed to work with smart people who might not even have a company. For example, when the Navy finds a great idea perhaps they can assign a “buddy” to help identify the appropriate funding vehicle, fill out the paperwork, and then make sure the vendor gets paid. Such a program would be a win-win if the Navy assigned young recruits to work with young entrepreneurs. America has great people that can solve any problem given to them if only asked.

Junaid

Good points Junaid, thanks for the add…

Thoughtful piece, well written Marv.

AI promises to be a force multiplier, and DoD applications will benefit by the skillful exploitation of AI innovation. The challenges are clear (we are in the infancy / early adoption phase).

As you point out, large datasets are needed to reduce decision ambiguity – this information will take considerable time to acquire (and the threats are ever changing) for complete automation.

Perhaps the appropriate application is to exploit AI for mundane, repetitive actions (and then to detect security / exploits against those actions).

The Boeing Max and other failures highlight the need to aggressively apply DevSecOps to all development, and to challenge contractors’ economic goals against safety constraints. The issue is not technology, it’s process and oversight.

Thanks Glen, good comments… we need to crawl, walk, run here but the push is to start running first:-)

Terrific insight and perspective Marv. Thanks for sharing.

It is my understanding that the primary use case for convolutional neural networks is pattern matching, which is a shallow kind of “intelligence” shared by humans and animals. These neural networks excel at finding and classifying patterns in images (assuming robust training data has properly initialized the neuron weights). If an object in a picture is shifted, rotated, and scaled, then the “convolution” feature enables the neural network to recognize the same object. NLP works in a similar manner by converting spoken language to acoustic waves which can be processed as (time series) images and, again, the “convolution” feature allows the network to perform pattern-matching regardless of the shifted or scaled location in the time series.

For problems that cannot be represented in image form, AI technology is still immature. For example, consider this use case: Input a calculus textbook and output the solution to all problems. Abstract concepts and context cannot be readily captured and framed as inputs for an AI algorithm, nor can they be easily embedded in training data. Breakthroughs in artificial *human* intelligence require achieving levels of data processing, information correlation, and knowledge generation not found in animals.

Military success in the battlespace is not an exercise in pattern matching. Certainly there is a role for (pattern-matching) AI as input to the Command-and-Control decision process, but it may produce equal parts clarity and ambiguity for the commander, particularly when the associated error/validity assessments cannot be explained by the AI engine. BTW, I am reminded of a challenge once presented to me by RADM (ret) John Gauss, where he asked me to define command and control. I don’t recall my answer, but I’m pretty sure I blathered something about data communication channels and data processing/correlation, resulting in a shared situation-awareness display. He interrupted my technobabble (thankfully) and declared “It is command of forces and control of weapons”. Sweet and pithy!

BTW, I have enjoyed your posts, and this one is no exception. My rejoinder to your post here is my opinion that we (and the Chinese) are a long way from developing AI capabilities that effectively challenge the human intellect in command and control.

Thanks Lee, great comments and good added detail. Nice to have a brainiac adding value to the discussion:-)

Many years ago, I wrote a white paper on why it is difficult to build C4I systems (http://www.dodccrp.org/events/12th_ICCRTS/CD/html/papers/061.pdf). One of the key questions I posed in the paper asks whether C4I software is more difficult to write than stock market investment software. First, the goal of investment software is well-defined: Make Money, so the software requirements document is only 2 words long. Second, corporate performance data and balance sheets are readily available as input data, as are various other financial and regulatory documents. So why is it hard to write investment software or, more to the point, why isn’t AI proving its mettle in optimizing investment strategies?

The thesis of my paper is that C4I software is orders of magnitude harder to write than investment software. If AI can’t solve the “easy” problems, then……….

AI, IA

II Capt Marv! excellent point of view on the Terminology and the application of these capabilities on mission, Potentially. Thanks for leading the learning and your perspective is always modern and relevant. Cheers

Hi Marv, thank you for posting. I agree we have a ways to go before AI will dictate warfighters’ responses as they complete their missions. Warfighters are limited by edge computing capabilities to process data to facilitate the best real-time decisions. My team has been studying AI capabilities to determine where and how to best build models with existing knowledge that can enable real-time data to be processed in response to either threats or systems, with greater accuracy. However great the data crunching is, human intuition might be the greatest gift from God, separating AI and us! Time will tell. Best.

Thanks Jamieson, it still is hard to beat human intuition and knowledge!